Developing moral models for AI systems

Healthcare, mobility and the defence industry are just some of the domains where the choices we make today can have a lasting impact on people and societies. It is therefore vital that values such as safety, trust and well-being are integrated into the decision-making process. When AI systems are added to the equation, that responsibility weighs even more heavily: AI systems need to act and react in line with human morals. So how far have we come in developing an AI system that we can trust to do the right thing?

Building ethics into AI systems

To answer that question, we first need to understand our own moral compass. What do we consider right and wrong behaviour in a specific context? Which values are relevant, and how do we apply them when making a split-second decision?

Let’s take driving as the most obvious example. How do we weigh traffic safety against traffic rules? In other words, at what point does common sense override the regulations, for instance when we pull over illegally to let an ambulance pass? For AI systems to assess situations the way we would, they need to work from a solid framework that we consider morally just.

Adapting to changing values

Aside from the moral considerations, we need to examine how an AI system can exhibit the right behaviour. This involves incorporating the relevant values early in the design and development stages. We must also acknowledge that human values differ across countries and cultures, and that they change over time. For an AI system to ‘think for itself’ in a way that meets today’s and tomorrow’s standards, it must keep adapting to our ever-changing values.

The next logical question is this: what guarantee do we have that a system abides by these changing values? Road safety for autonomous driving can partially be guaranteed within a clearly defined framework, for example by capping the system’s speed at the local speed limit. But this is not sufficient, as many other, more complex circumstances require the AI system to act in our human interest. One solution is therefore to implement a moral model in the car: a specification of values in a format the car can interpret, enabling the system to calculate the morally just behaviour in every imaginable situation.
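To make the idea of a machine-interpretable moral model more concrete, here is a minimal sketch. It assumes, purely for illustration, that values are encoded as numeric weights and that each candidate action is scored per value; the names `VALUE_WEIGHTS`, `Action`, `cap_speed`, `evaluate` and `choose`, and all numbers, are hypothetical and do not describe how MMAIS actually encodes values. The sketch combines a hard rule (capping speed at the local limit) with a weighted trade-off between values, and reports each value's contribution as a rudimentary justification. A weighted sum is only one simple way to express such trade-offs.

```python
from dataclasses import dataclass

# Hypothetical value weights (assumption): in practice these would follow from
# value identification and specification, not from hard-coded numbers.
VALUE_WEIGHTS: dict[str, float] = {
    "safety": 0.6,
    "rule_compliance": 0.25,
    "well_being": 0.15,
}


@dataclass(frozen=True)
class Action:
    """A candidate action with a score in [0, 1] for each value it serves."""
    name: str
    scores: dict[str, float]


def cap_speed(requested_kmh: float, local_limit_kmh: float) -> float:
    """Hard constraint: never exceed the local speed limit."""
    return min(requested_kmh, local_limit_kmh)


def evaluate(action: Action, weights: dict[str, float]) -> float:
    """Weighted sum of the action's value scores; unscored values count as 0."""
    return sum(w * action.scores.get(value, 0.0) for value, w in weights.items())


def choose(
    actions: list[Action], weights: dict[str, float]
) -> tuple[Action, list[tuple[str, float]]]:
    """Pick the highest-scoring action and explain it by per-value contribution."""
    best = max(actions, key=lambda a: evaluate(a, weights))
    contributions = sorted(
        ((value, w * best.scores.get(value, 0.0)) for value, w in weights.items()),
        key=lambda item: item[1],
        reverse=True,
    )
    return best, contributions


if __name__ == "__main__":
    # Ambulance scenario from the text; the scores are illustrative guesses.
    stay = Action("stay in lane",
                  {"safety": 0.2, "rule_compliance": 1.0, "well_being": 0.1})
    pull_over = Action("pull over onto the verge",
                       {"safety": 0.9, "rule_compliance": 0.3, "well_being": 0.9})

    print(cap_speed(130, 100))  # 100
    best, why = choose([stay, pull_over], VALUE_WEIGHTS)
    print(best.name)  # pull over onto the verge
    for value, contribution in why:
        print(f"{value}: {contribution:.3f}")
```

The hard cap covers the clearly defined part of road safety, while the weighted choice handles cases like the ambulance example, where safety outweighs strict rule compliance. The sorted per-value contributions are a crude form of the value-based justification an AI system should be able to give.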

Meaningful human control is key

The goal when developing any AI system should always be to guarantee that it exhibits the right behaviour. Only when this is achieved can we fully trust the system to make impactful decisions on our behalf. Essential to this is maintaining Meaningful Human Control over AI systems, that is, being able to correct the system’s behaviour so that it matches our own moral compass. The AI system needs to be able to justify its actions, and the advice it gives us, in terms of the values that make up our moral compass.

TNO’s Early Research Programme (ERP) on Moral Models for AI Safety (MMAIS) aims to establish broadly accepted methods that stakeholders in every domain can draw on when applying AI in their own systems and processes. The methods that MMAIS develops are founded on the following four pillars:

- Value Identification: understanding what people find important, valuable and ethically responsible;

- Value Specification: translating those perceptions and considerations into specific behaviours;

- Value Implementation: ensuring that the behaviour humans consider morally just is implemented in the AI system;

- Value Alignment: continuously evaluating the AI system’s behaviour and adapting it to the relevant moral standard.

Developing moral models for AI systems is an ongoing process that acknowledges that our values change over time and differ by culture and context.

Within the MMAIS project, we develop methods that support building a moral model for an AI system, so that the system achieves and maintains morally just behaviour in a specific context.

Neem contact met ons op

Get inspired

Working on reliable AI

AI model for personalised healthy lifestyle advice

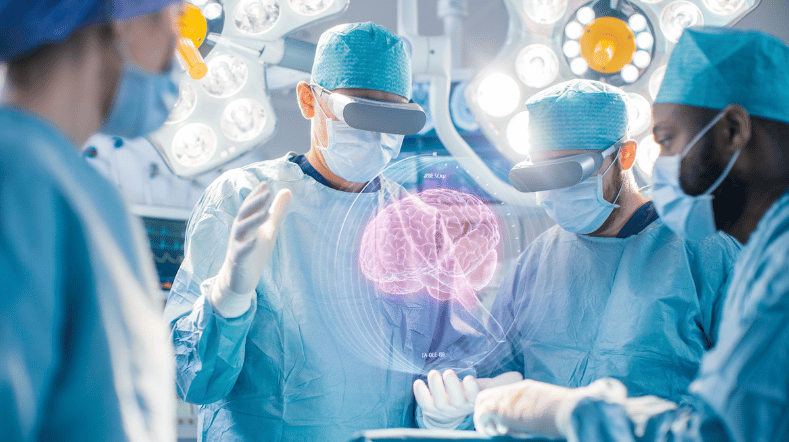

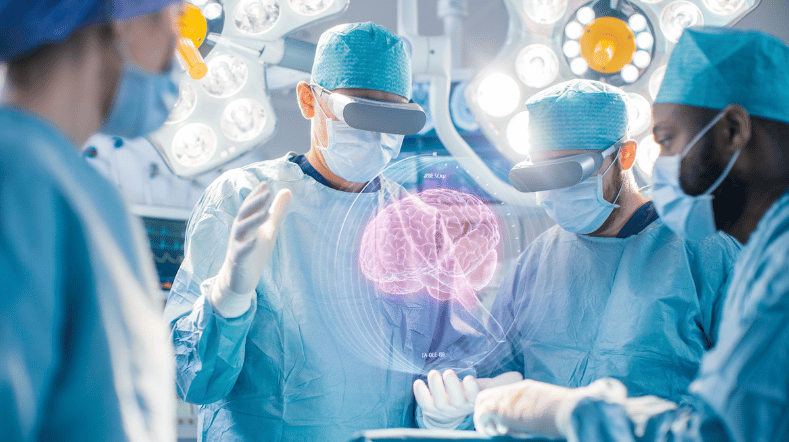

AI in training: FATE develops digital doctor's assistant

Boost for TNO facilities for sustainable mobility, bio-based construction and AI

GPT-NL boosts Dutch AI autonomy, knowledge, and technology