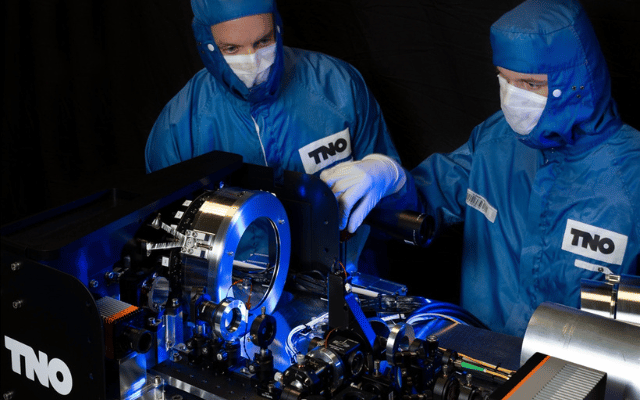

Collaboration with TNO

Innovation is a matter of working together, and that requires partners. That is why, every year, we team up with some 3,000 organisations, from large multinationals and SMEs to universities and public-sector bodies, both in the Netherlands and abroad, to tackle challenges together.

How we work

Knowledge development is a joint endeavour, something we see every day at TNO. That is why companies, public-sector bodies and other organisations frequently work with us, and we are keen to work with them. Our collaborations take many forms: projects on commission, public-private partnerships, technology transfer, partnerships via associations and Joint Innovation Centres (JICs). Interested in finding out how we can work with you?

Projects on commission

If there is something you would like to have researched for your company, public-sector organisation or foundation, you can commission a research project. We will make agreements with you about the work, the cost, and the exclusivity of the results.

Public-private partnerships

Companies, public-sector bodies and social organisations can also work with us in public-private partnerships, which can take the form of a short-term project or a long-term programme.

SME

TNO Fast Track is TNO's platform for entrepreneurs: start-ups, scale-ups and SMEs. It offers quick access to TNO's knowledge, facilities and network.

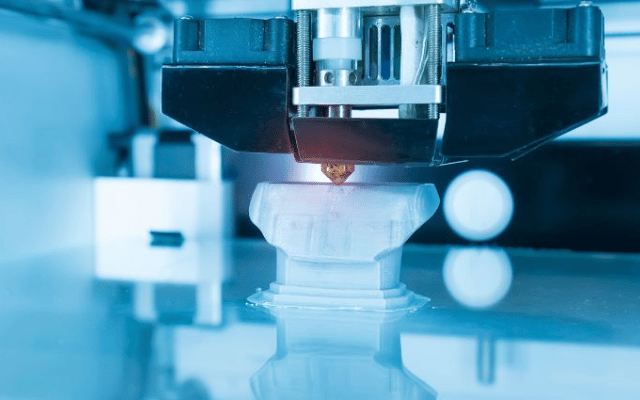

Technology Transfer

Technology transfer, an important part of the valorisation process, brings technology to the market either by establishing new companies (spin-offs) or by licensing it to existing companies. This is how TNO innovations are turned into products, economic activity and high-quality jobs.

International

Research and innovation do not stop at national borders. We can only strengthen the knowledge base of the Netherlands if we work closely with leading international knowledge partners, companies and governments.

Overview of all project categories, their characteristics and conditions

| Project categories | Characteristics | Typical conditions | Examples |
|---|---|---|---|
| Assignment | TNO is 100% funded by customers | | |
| Public-private partnership | TNO is funded by a mix of public funds and customer contributions | Conditions for public funding, for public funding from external programmes and for mixed funding models | |
| Technology transfer | Depending on type of service | Support services for licensing TNO technology, participation in start-ups and scale-ups, and creation of TNO spin-offs | Tech Transfer |
| Partnerships via associations | TNO arranges its own funding | | |
| Partnerships via Joint Innovation Centres (JICs) | Depending on type of JIC | Depending on type of JIC; see the specific JIC for more information | Holst Centre, ESI, Solliance Solar, i-Botics, QuTech, Dutch Optics Centre, etc. |